Reinforcement Learning for AI Memory Systems

Our first HumemAI research paper, A Machine with Human-Like Memory Systems, did not use machine learning. Instead, all memory management policies were handcrafted. While these handcrafted policies worked well, they could not adapt to new environments.

To address this limitation, we introduced reinforcement learning (RL) in this second paper. RL is one of the three main branches of machine learning, alongside supervised and unsupervised learning, and it optimizes a fundamentally different objective: reward maximization. Rather than fitting a model to data, as maximum likelihood estimation does, an RL agent learns a strategy that maximizes its long-term reward. This can lead to superhuman performance, as AlphaGo demonstrated.
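
The long-term objective mentioned above is commonly formalized as the discounted return: the sum of future rewards, each weighted by a power of a discount factor. The sketch below is only illustrative. The function name `discounted_return` and the value `gamma=0.5` are assumptions chosen for this example and are not taken from the paper.

```python
def discounted_return(rewards: list[float], gamma: float) -> float:
    """Compute sum_k gamma**k * rewards[k] by accumulating from the last reward."""
    total = 0.0
    for reward in reversed(rewards):
        # Each step back in time discounts everything that comes later once more.
        total = reward + gamma * total
    return total


# Hypothetical reward sequence: a reward now, nothing next, a reward after that.
example = discounted_return([1.0, 0.0, 1.0], gamma=0.5)
```

A smaller `gamma` makes the agent favor immediate rewards, while a value close to 1 makes distant rewards count almost as much as immediate ones.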

To evaluate our memory management system, we introduced a new toy environment: RoomEnv-v1, which is more complex than its predecessor, RoomEnv-v0.

Abstract

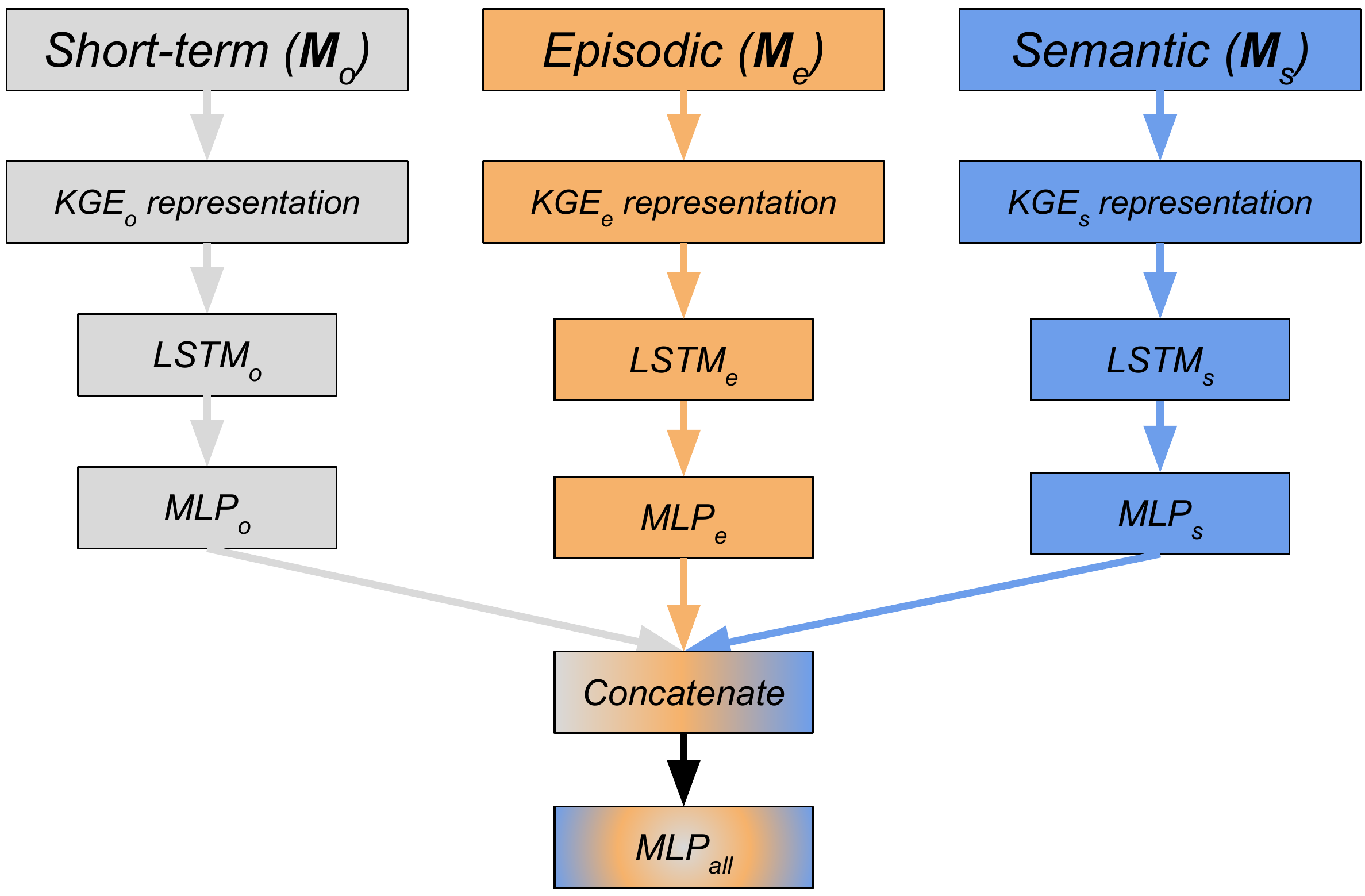

Inspired by the cognitive science theory of explicit human memory systems, we modeled an agent with short-term, episodic, and semantic memory systems, each represented as a knowledge graph. To evaluate this system, we designed and released our own reinforcement learning agent environment, “The Room”, where an agent must learn how to encode, store, and retrieve memories to maximize its return by answering questions.

Our deep Q-learning-based agent successfully learns whether a short-term memory should be forgotten or transferred into the episodic or semantic memory systems. Our experiments indicate that an agent with human-like memory structures outperforms an agent without such memory mechanisms in this environment.
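
The decision described above can be pictured as a three-way action applied to each short-term memory. The following is a minimal sketch, not the paper's implementation. The names `MemoryAction`, `MemorySystems`, `select_action`, and `apply_action`, the simplified triple-based memory layout, and the hard-coded Q-values are all hypothetical. In a real agent, the Q-values would come from a trained deep Q-network.

```python
import random
from dataclasses import dataclass, field
from enum import Enum


class MemoryAction(Enum):
    """Hypothetical action space: what to do with one short-term memory."""
    FORGET = 0
    TO_EPISODIC = 1
    TO_SEMANTIC = 2


# Assumed simplification: a memory is a knowledge-graph triple (head, relation, tail).
Triple = tuple[str, str, str]


@dataclass
class MemorySystems:
    """Assumed layout: each memory system holds a list of triples."""
    short_term: list[Triple] = field(default_factory=list)
    episodic: list[Triple] = field(default_factory=list)
    semantic: list[Triple] = field(default_factory=list)


def select_action(q_values: list[float], epsilon: float, rng: random.Random) -> MemoryAction:
    """Epsilon-greedy choice: explore with probability epsilon, else take the best Q-value."""
    if rng.random() < epsilon:
        return rng.choice(list(MemoryAction))
    best_index = max(range(len(q_values)), key=lambda i: q_values[i])
    return MemoryAction(best_index)


def apply_action(memory: MemorySystems, action: MemoryAction) -> None:
    """Remove the oldest short-term memory and route it according to the action."""
    triple = memory.short_term.pop(0)
    if action is MemoryAction.TO_EPISODIC:
        memory.episodic.append(triple)
    elif action is MemoryAction.TO_SEMANTIC:
        memory.semantic.append(triple)
    # FORGET: the triple is dropped and stored nowhere.


memory = MemorySystems(short_term=[("laptop", "at_location", "desk")])
# Placeholder Q-values, one per action; a trained network would produce these.
action = select_action([0.1, 0.7, 0.2], epsilon=0.0, rng=random.Random(0))
apply_action(memory, action)
```

With `epsilon=0.0` the choice is purely greedy, so the example picks the action with the highest placeholder Q-value and moves the memory into the episodic store. During training, a nonzero `epsilon` would let the agent occasionally try the other two actions.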

Read the Full Paper

Check out the full research paper on arXiv: https://arxiv.org/abs/2212.02098.